How Accurate Data Ingestion Reduces Risk and Improves Profitability

Accio Analytics Inc.


Think inaccurate data is just a technology problem? It isn't. It is a business risk that can be very costly.

For asset managers, inaccurate or late data is more than an inconvenience: it undermines risk management, regulatory compliance, and profitability. A single wrong price can propagate through every downstream process, distort performance measurement, trigger risk-limit breaches, and erode client trust. Worse, legacy batch processing leaves firms exposed to missed opportunities and regulatory fines.

The fix? Real-time data ingestion.

This is not an incremental change; it is a fundamental shift in how firms handle market data, transactions, and compliance. Automated data validation eliminates the errors that creep in with manual checks, lets you react quickly to market moves, and lays the foundation for better decisions.

Automation and Error Detection

Artificial intelligence streamlines data ingestion by learning patterns and spotting anomalies. Modern systems compare historical and incoming data to flag changes that may indicate errors. For example, if a price moves outside its normal range, the system flags it for review.
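A minimal sketch of such a range check, assuming "normal range" means that the new daily return lies within `z_limit` standard deviations of the mean of recent daily returns. The function name `flag_price` and the default threshold of 4 are illustrative assumptions, not any particular vendor's model; production systems typically use richer statistics or learned models.

```python
from statistics import mean, stdev


def flag_price(history: list[float], new_price: float, z_limit: float = 4.0) -> bool:
    """Return True if new_price looks out of range relative to recent history.

    Assumption: "normal range" means the new simple return lies within
    z_limit standard deviations of the mean historical daily return.
    """
    if len(history) < 3:
        # Too little history to estimate a range; a real pipeline might route to review instead.
        return False
    returns = [curr / prev - 1 for prev, curr in zip(history, history[1:])]
    mu, sigma = mean(returns), stdev(returns)
    new_return = new_price / history[-1] - 1
    if sigma == 0:
        # Flat history: any move away from the historical mean return is unusual.
        return new_return != mu
    return abs(new_return - mu) / sigma > z_limit


history = [100.0, 101.0, 100.5, 101.2, 100.8, 101.0]
print(flag_price(history, 101.3))  # False: an ordinary move
print(flag_price(history, 80.0))   # True: a ~21% drop is flagged for review
```

Working on returns rather than price levels keeps the check comparable across securities trading at very different prices.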

Anomaly detection compares new data against historical trends. If a mutual fund's reported return diverges sharply from what its holdings and market movements imply, the system opens an investigation. This approach catches both large and subtle anomalies that might slip past a human reviewer.
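The holdings-versus-reported comparison can be sketched as follows. This is a simplified, single-period illustration: the implied return is the weighted sum of security returns, and the 50 bp `tolerance` is an assumed threshold. It ignores fees, cash flows, and intraperiod trading, which a real check would have to account for. The security names are hypothetical.

```python
def implied_return(weights: dict[str, float], security_returns: dict[str, float]) -> float:
    """Holdings-implied return: the sum of weight * return over the fund's positions."""
    return sum(w * security_returns[sec] for sec, w in weights.items())


def return_mismatch(reported: float, weights: dict[str, float],
                    security_returns: dict[str, float], tolerance: float = 0.005) -> bool:
    """True if the reported return differs from the implied return by more than tolerance.

    tolerance is an assumed 50 bp band; fees, cash flows, and intraperiod
    trading are ignored here.
    """
    return abs(reported - implied_return(weights, security_returns)) > tolerance


weights = {"EQUITY_A": 0.6, "BOND_B": 0.4}            # hypothetical holdings
security_returns = {"EQUITY_A": 0.02, "BOND_B": -0.01}
print(implied_return(weights, security_returns))       # about 0.008
print(return_mismatch(0.03, weights, security_returns))  # 3% reported vs ~0.8% implied
```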

Automated reconciliation is a major shift. Modern systems reconcile trades, positions, and cash movements across systems in minutes, a job that once took hours of manual work. These systems not only flag breaks but also suggest fixes and learn from user feedback to detect similar issues more reliably next time.
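The core of position reconciliation is an outer join on the security key followed by a quantity comparison. The sketch below covers only break detection between two hypothetical sources (an internal book and a custodian file); the fix suggestions and feedback learning described above are not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Break:
    security: str
    internal_qty: Optional[float]   # None = missing from the internal book
    custodian_qty: Optional[float]  # None = missing from the custodian file


def reconcile(internal: dict[str, float], custodian: dict[str, float],
              tolerance: float = 1e-6) -> list[Break]:
    """Compare position quantities from two systems and list every break."""
    breaks = []
    for sec in sorted(internal.keys() | custodian.keys()):  # outer join on security
        ours, theirs = internal.get(sec), custodian.get(sec)
        if ours is None or theirs is None or abs(ours - theirs) > tolerance:
            breaks.append(Break(sec, ours, theirs))
    return breaks


internal = {"AAPL": 100, "MSFT": 50, "IBM": 10}
custodian = {"AAPL": 100, "MSFT": 45, "TSLA": 5}
for b in reconcile(internal, custodian):
    print(b)
```

Three kinds of break fall out of one pass: a quantity mismatch (MSFT), a position only we hold (IBM), and one only the custodian reports (TSLA).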

Predictive error prevention takes automation further. By analyzing historical error patterns, market conditions, and the reliability of each data source, modern platforms can anticipate problems. For example, during periods of high volatility, the system may apply additional checks to certain feeds or flag sources that have been wrong before.
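The paragraph describes predictive models; the sketch below substitutes a much simpler rule-based policy to show the idea of adjusting validation intensity to conditions. The check names, the 30% volatility threshold, and the 1% source error threshold are all hypothetical.

```python
def error_rate(failed: int, total: int) -> float:
    """Historical failure rate for a source; 0.0 if it has no history yet."""
    return failed / total if total else 0.0


def checks_for_feed(source_error_rate: float, market_volatility: float,
                    vol_threshold: float = 0.30, error_threshold: float = 0.01) -> list[str]:
    """Choose which validation checks to run on a feed (rule-based stand-in for a model).

    source_error_rate: share of recent records from this source that failed validation.
    market_volatility: e.g. annualized volatility of a reference index.
    Both thresholds are illustrative assumptions.
    """
    checks = ["schema", "range"]                     # baseline checks always run
    if market_volatility > vol_threshold:
        checks.append("cross_source_price_compare")  # volatile market: compare against a second source
    if source_error_rate > error_threshold:
        checks.append("sample_for_manual_review")    # historically unreliable source
    return checks


print(checks_for_feed(error_rate(30, 1_000), market_volatility=0.45))
```

A learned model would replace the fixed thresholds with a predicted error probability, but the output (which checks to run) can stay the same shape.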

Real-time data feeds and API integration are essential for maintaining an up-to-date view, especially in fast-moving markets. A streaming architecture lets data flow continuously from multiple sources, such as Bloomberg, Refinitiv, and ICE, which provide live updates on prices, corporate actions, and news. Trading systems also generate transaction data as trades happen, and custodians send settlement and cash-movement updates throughout the day.
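One small building block of a streaming setup is merging several time-ordered feeds into a single ordered stream. The sketch below uses `heapq.merge`, which consumes its inputs lazily and therefore also works with generators. It assumes each feed is already sorted by timestamp; the `Tick` type and source labels are hypothetical, and real vendor APIs are not shown.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class Tick(NamedTuple):
    ts: float       # event time, e.g. epoch seconds (assumed)
    source: str     # hypothetical source label such as "prices" or "custodian"
    payload: dict   # vendor-specific content, normalized elsewhere


def merge_feeds(*feeds: Iterable[Tick]) -> Iterator[Tick]:
    """Lazily merge feeds that are each sorted by timestamp into one ordered stream."""
    return heapq.merge(*feeds, key=lambda tick: tick.ts)


prices = [Tick(1.0, "prices", {"px": 101.2}), Tick(3.0, "prices", {"px": 101.4})]
trades = [Tick(2.0, "trades", {"qty": 500})]
custody = [Tick(2.5, "custodian", {"cash": -50_600})]
for tick in merge_feeds(prices, trades, custody):
    print(tick.ts, tick.source)
```

Real feeds can arrive slightly out of order, so production systems usually add a short buffering window before merging.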

Modern APIs handle authentication, data formatting, error handling, and retries, keeping data flowing even through brief network interruptions.
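Retry logic is the piece that rides out short network interruptions. Below is a minimal sketch of exponential backoff with full jitter. The helper name `with_retries` and its defaults are assumptions. The `sleep` parameter exists only so tests can skip real waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(call: Callable[[], T],
                 attempts: int = 5,
                 base_delay: float = 0.5,
                 retry_on: tuple = (ConnectionError, TimeoutError),
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `call`, retrying transient failures with exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error to the caller
            # Wait a random time between 0 and base_delay * 2**attempt before trying again.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    raise AssertionError("unreachable")
```

Usage would look like `with_retries(lambda: fetch_prices(...))`, where `fetch_prices` stands for whatever hypothetical client call fetches the data. Jitter spreads retries out so many clients recovering from the same outage do not hit the provider in lockstep.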

Event-driven processing lets systems respond instantly to new information. For example, when a trade executes, the system immediately updates positions and checks compliance limits. This is especially important in volatile markets, where portfolio managers need up-to-the-minute data to make fast decisions about rebalancing, hedging, or seizing opportunities.
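The trade-to-position-to-limit-check flow can be sketched with a minimal in-process publish/subscribe bus. Everything here is hypothetical: the `TradeExecuted` event, the `PositionBook` handler, and the limit type (a maximum absolute quantity per security). A production system would typically run on a message broker instead.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class TradeExecuted:
    account: str
    security: str
    quantity: float  # positive = buy, negative = sell


class EventBus:
    """Minimal in-process publish/subscribe dispatcher."""
    def __init__(self) -> None:
        self._handlers: dict[type, list[Callable]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: object) -> None:
        for handler in self._handlers[type(event)]:
            handler(event)


class PositionBook:
    """Updates positions on each trade and checks a per-security quantity limit."""
    def __init__(self, limits: dict[str, float]) -> None:
        self.limits = limits  # hypothetical limit: max absolute quantity per security
        self.positions: dict[tuple[str, str], float] = defaultdict(float)
        self.alerts: list[str] = []

    def on_trade(self, event: TradeExecuted) -> None:
        key = (event.account, event.security)
        self.positions[key] += event.quantity
        limit = self.limits.get(event.security)
        if limit is not None and abs(self.positions[key]) > limit:
            self.alerts.append(
                f"{event.account}/{event.security} position {self.positions[key]} exceeds limit {limit}")


bus = EventBus()
book = PositionBook(limits={"XYZ": 1_000})
bus.subscribe(TradeExecuted, book.on_trade)
bus.publish(TradeExecuted("fund-1", "XYZ", 600))
bus.publish(TradeExecuted("fund-1", "XYZ", 500))  # pushes the position to 1,100
print(book.positions[("fund-1", "XYZ")], book.alerts)
```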

How Accurate Data Ingestion Reduces Risk

In the financial world, minimizing risk while delivering strong returns is a constant balancing act. Poor data quality can lead to costly mistakes, regulatory headaches, and damaged client trust. Modern data ingestion systems tackle these challenges head-on by identifying and fixing errors before they can derail critical operations. Let’s explore how precise data ingestion methods cut delays and reduce risk exposure.

Reducing Errors and Delays in Data Processing

Delays in batch processing can let errors slip through unnoticed, amplifying both operational and compliance risks. For example, when pricing errors or outdated metrics go uncorrected, portfolio managers may base decisions on inaccurate information. High data volumes often require manual input, which not only slows things down but also increases the likelihood of mistakes. These errors can snowball into issues like flawed hedging strategies, compliance violations, or incorrect client reports.

Modern platforms solve this problem by processing data in real time. Instead of waiting for batch cycles, these systems validate and integrate new data almost instantly. As trades settle or corporate actions occur, portfolio positions and risk calculations reflect the latest market conditions. Advanced validation systems also isolate anomalies at the source, so valid data continues to flow uninterrupted.
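Isolating anomalies at the source is often implemented as a quarantine step: each record runs through a list of rules, and only failing records are held back with their error messages. A minimal sketch, assuming rules are plain functions that return an error string or `None`. The two rules shown are hypothetical examples.

```python
from typing import Callable, Optional

# A rule returns an error message, or None if the record passes.
Rule = Callable[[dict], Optional[str]]


def has_price(rec: dict) -> Optional[str]:
    return None if rec.get("price") is not None else "missing price"


def positive_price(rec: dict) -> Optional[str]:
    price = rec.get("price")
    return "non-positive price" if price is not None and price <= 0 else None


def split_valid(records: list[dict], rules: list[Rule]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Pass clean records through and quarantine failing ones with their error messages."""
    valid, quarantined = [], []
    for rec in records:
        errors = [msg for rule in rules if (msg := rule(rec)) is not None]
        if errors:
            quarantined.append((rec, errors))
        else:
            valid.append(rec)
    return valid, quarantined


records = [{"id": 1, "price": 10.0}, {"id": 2, "price": None}, {"id": 3, "price": -5.0}]
valid, quarantined = split_valid(records, [has_price, positive_price])
```

Record 1 flows on immediately, while records 2 and 3 wait in quarantine with the reasons attached for someone to review.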

Risk Management Through Automated Validation and Monitoring

Automated validation adds an extra layer of security against data-related risks. Quick error correction minimizes delays, while continuous monitoring ensures data integrity is maintained. Real-time monitoring tools track data flows for unusual patterns, helping to identify potential issues like feed disruptions, system integration problems, or sudden market shifts.
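Detecting a feed disruption can be as simple as tracking when each feed last delivered a message and flagging feeds that have been silent too long. A minimal sketch follows; the per-feed silence limits are assumptions that would in practice depend on each feed's normal cadence and on market hours.

```python
import time
from typing import Optional


class FeedMonitor:
    """Flags feeds that have gone quiet for longer than their allowed silence."""
    def __init__(self, max_silence: dict[str, float]) -> None:
        self.max_silence = max_silence  # seconds per feed; thresholds are assumptions
        self.last_seen: dict[str, float] = {}

    def record(self, feed: str, ts: Optional[float] = None) -> None:
        self.last_seen[feed] = time.time() if ts is None else ts

    def stale_feeds(self, now: Optional[float] = None) -> list[str]:
        now = time.time() if now is None else now
        # A feed never seen at all counts as stale.
        return sorted(feed for feed, limit in self.max_silence.items()
                      if now - self.last_seen.get(feed, float("-inf")) > limit)


monitor = FeedMonitor({"prices": 5, "trades": 60})
monitor.record("prices", ts=100)
monitor.record("trades", ts=100)
print(monitor.stale_feeds(now=110))  # prices silent for 10s, over its 5s limit
```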

This proactive approach is also a game-changer for compliance. Automated systems maintain detailed audit trails, recording when data is received, how it’s processed, and what validations are applied. These trails reduce the risk of missing confirmations or miscalculating risks. Intelligent anomaly detection further strengthens risk management by learning normal data patterns and flagging both obvious errors and subtle inconsistencies – issues that might go unnoticed by human reviewers during busy periods. By validating data immediately, firms can make better investment decisions and improve profitability.
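An audit trail of this kind boils down to an append-only log that records, for each record, the stage it reached, the checks applied, and the outcome. The sketch below keeps JSON lines in memory purely for illustration. A real deployment would write to durable, append-only storage. The stage names are hypothetical.

```python
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log of what happened to each record and which checks ran."""
    def __init__(self) -> None:
        self._lines: list[str] = []  # JSON lines; in-memory here, durable storage in practice

    def log(self, record_id: str, stage: str, checks: list[str], outcome: str) -> None:
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "record_id": record_id,
            "stage": stage,  # e.g. "received", "validated", "published"
            "checks": checks,
            "outcome": outcome,
        }
        self._lines.append(json.dumps(entry, sort_keys=True))

    def history(self, record_id: str) -> list[dict]:
        """Every logged step for one record, in the order it happened."""
        return [e for e in map(json.loads, self._lines) if e["record_id"] == record_id]


trail = AuditTrail()
trail.log("px-1", "received", [], "ok")
trail.log("px-1", "validated", ["range", "schema"], "pass")
trail.log("px-2", "received", [], "ok")
print(trail.history("px-1"))
```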

Traditional Batch Ingestion vs. Real-Time Ingestion

Real-time ingestion offers clear advantages over traditional batch processing, particularly in managing risk and scaling during market surges. Here’s how the two approaches compare:

- Error Detection: Real-time systems detect and correct errors quickly through automated checks, while batch systems may not surface problems until the next processing cycle.

- Data Freshness: Batch processing works with data that may already be stale, while real-time processing keeps data continuously up to date.

- Manual Effort: Legacy systems rely heavily on people to spot and resolve errors; modern systems automate most of these checks.

- Compliance Risk: Batch delays increase the risk of compliance breaches, while real-time correction reduces it.

- Resilience: Legacy systems need scheduled maintenance windows and take longer to recover after outages, whereas automated systems run continuously and recover quickly from checkpoints.

- Error Containment: Batch systems let errors spread to many downstream systems before they are caught, while modern systems stop problems at the source.
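Checkpoint-based recovery, mentioned in the comparison above, works by persisting the position in the message stream after each message so that a restart resumes where processing stopped rather than from the beginning. A minimal sketch, assuming an ordered, replayable message log (modeled here as a Python list). The class and file names are hypothetical. The pattern gives at-least-once processing: a crash between handling a message and saving the checkpoint means that message is handled again.

```python
import json
import os
import tempfile
from typing import Callable, Sequence


class CheckpointedConsumer:
    """Processes an ordered message log and persists the next offset after each message."""
    def __init__(self, path: str) -> None:
        self.path = path

    def load_offset(self) -> int:
        try:
            with open(self.path) as f:
                return json.load(f)["offset"]
        except FileNotFoundError:
            return 0  # no checkpoint yet: start from the beginning

    def save_offset(self, offset: int) -> None:
        # Write to a temp file in the same directory, then atomically replace the checkpoint,
        # so a crash mid-write never leaves a corrupt checkpoint behind.
        directory = os.path.dirname(os.path.abspath(self.path))
        fd, tmp_path = tempfile.mkstemp(dir=directory)
        with os.fdopen(fd, "w") as f:
            json.dump({"offset": offset}, f)
        os.replace(tmp_path, self.path)

    def run(self, messages: Sequence, handle: Callable) -> int:
        """Resume from the last checkpoint; returns how many messages were handled."""
        start = self.load_offset()
        for offset in range(start, len(messages)):
            handle(messages[offset])
            self.save_offset(offset + 1)
        return max(0, len(messages) - start)
```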

Real-time systems also scale well, maintaining performance even under peak load. They may cost more up front, but they save money by reducing manual fixes, costly compliance remediation, and emergency interventions. Better data also leads to better investment decisions and stronger returns over time.

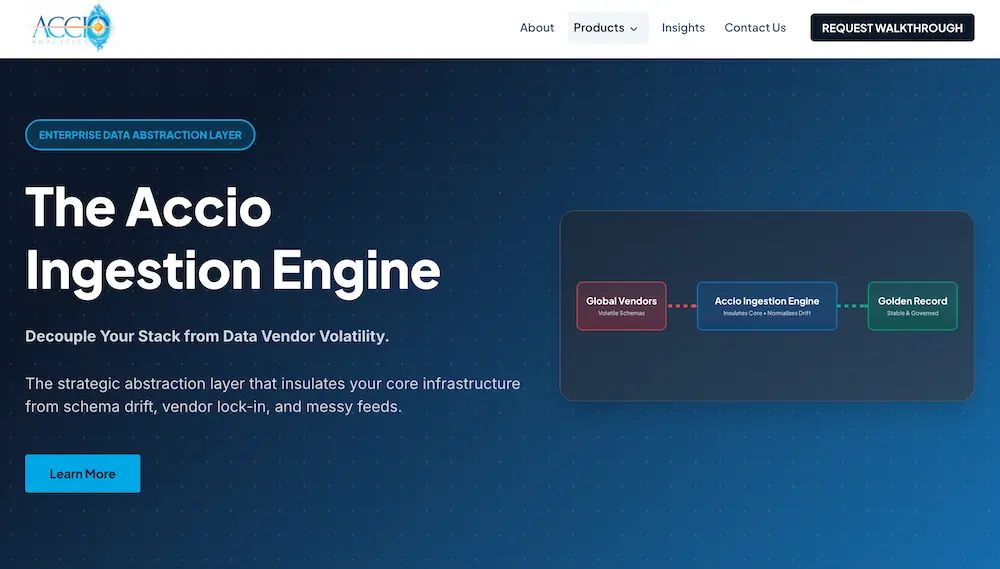

The Accio Ingestion Engine acts as a strategic abstraction layer: it receives, interrogates, and corrects incoming data, then publishes a standardized "Golden Record" that your downstream systems consume.


Improving Profitability with Accurate Data

Getting data right does not just reduce risk; it also boosts profitability. Clean, real-time data gives asset managers better tools to make smarter investments, cut costs, and find new revenue opportunities. It is not only about avoiding losses but about generating real gains that improve the bottom line. With risk under control, firms can adopt more dynamic and profitable approaches to asset management.

Better Portfolio Decisions

Real-time data changes how portfolio managers work, letting them rebalance immediately and respond to market changes as they happen. This capability is especially important in volatile periods, when stale data can create risk or lead to missed opportunities.

High-quality data powers machine learning models that can spot market inefficiencies and suggest adjustments. Advanced analytics also show managers quickly which positions are driving returns, making it faster and easier to refine strategies.

Consider Accio Quantum Core's Returns Agent. It provides real-time insight into how each component contributes to overall returns, so managers can act immediately instead of waiting for end-of-day reports. The Holdings Agent, meanwhile, keeps position data accurate across systems, providing a solid foundation for both reducing risk and improving returns in an integrated environment.
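The basic quantity behind "how each component contributes to overall returns" is the contribution to return: each position's weight times its return, which sums to the portfolio return. The sketch below is a generic single-period, arithmetic calculation with hypothetical holdings. It is not a description of how the Returns Agent works, and multi-period attribution needs linking methods not shown here.

```python
def contributions(weights: dict[str, float], returns: dict[str, float]) -> list[tuple[str, float]]:
    """Per-position contribution to portfolio return (weight * return), largest first."""
    contrib = {sec: w * returns[sec] for sec, w in weights.items()}
    return sorted(contrib.items(), key=lambda kv: kv[1], reverse=True)


weights = {"A": 0.5, "B": 0.3, "C": 0.2}     # hypothetical portfolio weights
returns = {"A": 0.01, "B": 0.05, "C": -0.02}
ranked = contributions(weights, returns)
portfolio_return = sum(c for _, c in ranked)  # contributions add up to the total
print(ranked, portfolio_return)
```

Here B is only 30% of the portfolio but contributes the most (1.5 percentage points), while C is a drag.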

Cutting Costs Through Automation and Efficiency

Accurate data ingestion does not just improve portfolios; it also cuts costs. Modern tools automate routine tasks such as monitoring feeds, reconciling data, and investigating errors. This frees data teams to focus on higher-value work, such as in-depth analysis and client engagement.

Streamlined data pipelines make it faster and easier to onboard new data sources, helping firms respond quickly to market opportunities. Cloud-based ingestion adds further flexibility, scaling up or down with demand and reducing spending on hardware and other capital expenses.

Compliance is another area where automation shines. Automated checks and records provide quick access to detailed logs during regulatory reviews, reducing legal exposure and keeping costs down. This combination of automation, flexibility, and built-in compliance creates a lean, responsive operation that can seize opportunities while keeping risk under control.

Why Use the Accio Ingestion Engine to Load and Clean Data

The Accio Ingestion Engine is a strategic "Data Firewall" designed to insulate a firm’s core infrastructure from the inherent volatility of external data providers. Rather than hard-coding ledgers to a specific vendor’s schema, this engine serves as an enterprise-grade abstraction layer that standardizes disparate feeds into a single, verified "Golden Record." By absorbing the shock of unannounced API changes, header updates, and schema drift at the border, the engine ensures that internal systems receive a consistent, audited stream of data regardless of external fluctuations.
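To make the abstraction-layer idea concrete, here is a minimal sketch of mapping vendor records onto a fixed internal schema. Header aliases absorb renamed columns, and a missing or ambiguous column is rejected at the border rather than passed downstream. The alias table, field names, and `SchemaDriftError` are hypothetical illustrations, not the Accio Ingestion Engine's actual schema or API.

```python
from datetime import date

# Hypothetical header aliases seen from different vendors; lowercase for case-insensitive matching.
FIELD_ALIASES = {
    "isin": {"isin", "isin_code", "security_isin"},
    "price": {"price", "px_last", "close_price"},
    "as_of": {"as_of", "pricing_date", "asofdate"},
}


class SchemaDriftError(ValueError):
    """Raised when an incoming record cannot be mapped onto the golden-record schema."""


def to_golden(raw: dict) -> dict:
    by_normalized_name = {k.strip().lower(): v for k, v in raw.items()}
    out = {}
    for field, aliases in FIELD_ALIASES.items():
        hits = [name for name in by_normalized_name if name in aliases]
        if len(hits) != 1:
            # Missing or ambiguous column: stop at the border instead of passing bad data downstream.
            raise SchemaDriftError(f"cannot map {field!r} from columns {sorted(raw)}")
        out[field] = by_normalized_name[hits[0]]
    return {
        "isin": str(out["isin"]).strip().upper(),
        "price": float(out["price"]),
        "as_of": date.fromisoformat(str(out["as_of"])),
    }


print(to_golden({"ISIN_Code": " us0378331005 ", "PX_LAST": "189.5", "AsOfDate": "2024-03-01"}))
```

Downstream systems only ever see the three normalized fields, so a vendor renaming `PX_LAST` to `close_price` requires no change outside the alias table.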


This architecture addresses the "Intelligence Gap" found in traditional ETL tools. The engine does not simply move data; it interrogates it using domain-specific financial logic. It proactively identifies missing business days, flags duplicate holdings, and resolves broken hierarchies before they can reach downstream systems. This transitions a firm’s data stack from a series of brittle, manual scripts to a resilient microservices model capable of processing millions of rows per minute while maintaining full regulatory transparency.
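Two of the domain checks named above, missing business days and duplicate holdings, can be sketched in a few lines. This assumes weekends are non-business days and that the caller supplies the holiday calendar. Detecting broken hierarchies is not covered. The function names and row layout (fund, security, date) are hypothetical.

```python
from collections import Counter
from datetime import date, timedelta


def missing_business_days(dates: set[date], start: date, end: date,
                          holidays: frozenset = frozenset()) -> list[date]:
    """Weekdays in [start, end] that are not holidays and have no data."""
    missing, d = [], start
    while d <= end:
        if d.weekday() < 5 and d not in holidays and d not in dates:
            missing.append(d)
        d += timedelta(days=1)
    return missing


def duplicate_holdings(rows: list[tuple[str, str, date]]) -> list[tuple[str, str, date]]:
    """(fund, security, date) keys that appear more than once."""
    counts = Counter(rows)
    return sorted(key for key, n in counts.items() if n > 1)


loaded = {date(2024, 3, 1), date(2024, 3, 4), date(2024, 3, 6), date(2024, 3, 8)}
print(missing_business_days(loaded, date(2024, 3, 1), date(2024, 3, 8)))
```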

Conclusion: Building a Resilient, Profitable Future

Running a safe and profitable asset management business requires one thing above all: accurate data ingestion. As we have discussed, data quality touches everything, from day-to-day portfolio operations to long-term strategy. Poor data quality does not just slow you down; it leads to delayed trades, compliance headaches, missed opportunities, and fines. Conversely, fast, accurate data turns problems into opportunities and lays the foundation for smarter decisions.

Modern data systems are changing the game. Instead of relying on legacy batch processing, they deliver live insights and faster decision-making, letting firms respond to shifts in fast-moving markets as they happen rather than after the fact.

The financial benefits are clear. Automated checks reduce human error, while real-time processing strengthens portfolio strategy by delivering timely insight into market moves and position changes. Better data also strengthens compliance, reducing the risk of fines and audit findings, while cutting costs and growing revenue.

The competitive landscape is changing fast. Regulations are getting stricter, and client expectations keep rising. Firms that invest in accurate data ingestion will be better positioned to meet these challenges.

Related Article:

10 reasons why accurate Data Ingestion is critical for Risk Management
